Fix race conditions: stop flaky async bugs in your app

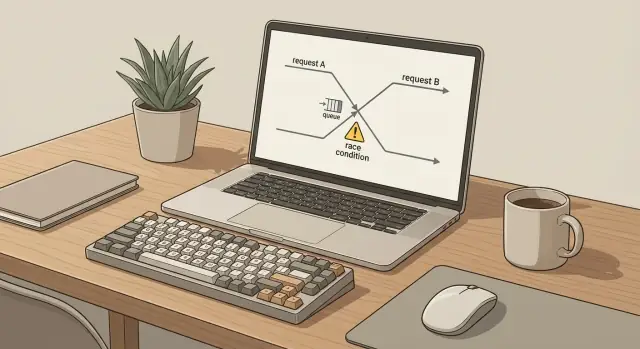

Learn how to fix race conditions by spotting non-deterministic behavior in queues, web requests, and state updates, then stabilizing it.

What flaky async bugs look like in real life

A flaky async bug is one where doing the same thing twice gives two different results. You click the same button, submit the same form, or run the same job, but the outcome changes. One time it works. The next time it fails, or it half-works and leaves data in a weird state.

Async work makes this more likely because tasks do not finish in a neat line. Requests, queue jobs, timers, and database writes can overlap. The order can change based on small timing differences: network delays, a slow database query, a retry, or one user doing the same action twice.

That is why race conditions often feel “random.” The bug is real, but it only shows up when two things happen in the unlucky order. For example:

- A user double-clicks “Pay now” and two requests create two orders.

- A background job retries after a timeout, but the first attempt actually succeeded.

- Two tabs update the same profile, and the last response overwrites the newer data.

- A webhook arrives before the record it depends on is committed.

You do not need to be an expert to diagnose these. If you can answer “what happened first?” you are already doing the right kind of thinking. The goal is to stop guessing and start observing: which actions ran, in what order, and what each one believed the current state was at that moment.

Where race conditions usually hide

Race conditions rarely sit in the obvious line of code you are staring at. They hide in the gaps between steps: after a click, before a retry finishes, while a background job is still running. If you are trying to fix race conditions, start by mapping every place work can happen twice or in a different order than you expected.

A common hiding spot is queues and background jobs. One event turns into two jobs (or the same job is retried), and both run fine on their own. Together they create duplicates, process out of order, or trigger a retry storm that makes the system look random.

Web requests are another classic source. Users double-click, mobile networks retry, and browsers keep parallel tabs alive longer than you think. Two requests hit the same endpoint, each reads the same old state, then both write back, and the last write quietly wins.

State updates are where things get subtle. You might have two updates that are each valid on their own, but they collide. The update that finishes last can overwrite a newer value because it started earlier from stale data, or because the code assumes it is the only writer.

Here are the places to check first:

- Queue consumers and workers that can run concurrently

- Cron jobs that can overlap when one run is slow

- “Retry on failure” logic that is not idempotent

- Third-party API calls that can partially succeed before timing out

- Any flow that does read-modify-write without a guard

Example: an AI-generated app sends a “welcome email” from a web request, and also from a background job “just in case.” Under load, both paths fire, and you see duplicates only sometimes. The bug is not the email code. It is the missing rule about who is allowed to send, and how many times.

Fast signals that point to non-determinism

Non-deterministic bugs feel like bad luck: a 500 error appears, you hit refresh, and it works. That “disappears on retry” pattern is one of the clearest signs you are dealing with timing, not a single broken line of code.

Watch what happens to data. If a record is sometimes missing, sometimes duplicated, or sometimes overwritten by an older value, something is updating the same thing from two places. It often shows up as “payment captured twice,” “email sent twice,” or “profile saved but fields reverted.”

The logs usually tell on you, even when the bug won’t. If you see the same action twice with the same user and payload (two job runs, two API calls, two webhook handlers), assume concurrency or retries until proven otherwise.

Quick signals to look for

These patterns show up again and again when you need to fix race conditions:

- Bug reports nobody can reproduce on demand, especially ones that appear only on certain devices or networks

- Errors that spike when traffic is higher or the database is slower

- A workflow that fails only when multiple tabs are open or users double-click

- A job queue that “sometimes” processes out of order or runs duplicates after retries

- Support tickets that cluster around timeouts, retries, or degraded performance

A concrete example: two checkout requests arrive close together (double-click + slow response). Both see “inventory available,” both reserve it, and only one later fails. The retry then “fixes it,” masking the real issue.

If you inherited an AI-generated prototype, these symptoms are common because async flows are often glued together without clear ownership. FixMyMess audits these paths quickly by tracing each request and job run end to end before touching the code.

Instrument first: the minimum logging that pays off

When you try to fix race conditions by “waiting a bit” or adding sleeps, you usually make the bug harder to see. A few well-chosen logs will tell you what is actually happening, even when the failure is rare.

Start by giving every user action or job run a correlation ID. Use it everywhere: the web request, the queue job, and any downstream calls. When someone reports “it failed once,” you can pull one thread through the whole system instead of reading unrelated noise.

Log the start and finish of each important step, with timestamps. Keep it boring and consistent, so you can compare runs. Also record the shared resource being touched, like the database row ID, cache key, email address, or file name. Most flaky async bugs happen when two flows touch the same thing in a different order.

A minimal logging set that pays off:

- correlation_id, user_id (or session_id), and request_id/job_id

- event name and step name (start, finish)

- timestamp and duration

- resource identifiers (row ID, cache key, filename)

- retry_count and error_type (timeout vs validation vs conflict)

Make logs safe. Never print secrets, tokens, raw passwords, or full credit card data. If you need to confirm “same value,” log a short fingerprint (like the last 4 characters or a masked version).
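Here is a minimal Python sketch of that logging set. It assumes JSON lines written to stdout and a correlation ID carried in a `contextvars` variable. The helpers `start_correlation`, `log_step`, and `fingerprint` are hypothetical names. Hashing the value swaps in an alternative to the last-4-characters approach: you can compare values across log lines without ever writing the raw value.

```python
import contextvars
import hashlib
import json
import time
import uuid

# Holds the correlation ID for the current request or job run.
correlation_id_var = contextvars.ContextVar("correlation_id", default=None)


def start_correlation(existing_id=None):
    """Reuse an incoming ID (from a header or job payload) or mint a new one."""
    cid = existing_id or uuid.uuid4().hex
    correlation_id_var.set(cid)
    return cid


def fingerprint(value: str) -> str:
    """Short, non-reversible fingerprint so logs can show 'same value' safely."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:8]


def log_step(event, step, **fields):
    """Emit one JSON log line; callers pass IDs, resource, retry_count, and so on."""
    record = {
        "ts": time.time(),
        "correlation_id": correlation_id_var.get(),
        "event": event,
        "step": step,
        **fields,
    }
    print(json.dumps(record, sort_keys=True))
    return record
```

A request handler would call `start_correlation(incoming_id)` once. It would then call `log_step("checkout", "start", order_id="1001", retry_count=0)` and a matching `"finish"` line, passing the same ID along to any job it enqueues.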

Example: a user clicks “Pay” twice. With correlation IDs and start/finish logs, you can see two requests racing to update the same order_id, one retrying after a timeout. This is the point where teams often bring FixMyMess in: we can audit the codebase and add the right instrumentation before touching the logic, so the real cause becomes obvious.

Create a reliable reproduction without guessing

Flaky async bugs feel impossible because they disappear when you watch them. The fastest way to fix race conditions is to stop “trying things” and build a repeatable failing case.

Start by choosing one flow that fails and write the expected sequence in plain words. Keep it short, like: “User clicks Pay -> request creates order -> job reserves inventory -> UI shows success.” This becomes your baseline for what should happen, and what is happening instead.

Now make the bug easier to trigger by turning up the pressure on timing and concurrency. Don’t wait for it to occur naturally.

- Force parallel actions: double-click, open two tabs, or run two workers against the same queue.

- Add delay on purpose in the suspicious step (before a write, after a read, before an external API call).

- Slow the environment: throttle network, add DB latency, or run the task in a tight loop.

- Reduce scope: reproduce in the smallest handler or job you can isolate.

- Write down the exact inputs and timing setup so anyone can rerun it.

A concrete example: a “Create Project” button sometimes makes two projects. Put a 300ms delay right before the insert, then click twice quickly or submit from two tabs. If you can trigger duplicates 8 out of 10 times, you have something you can work with.
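The “Create Project” repro can be sketched in Python, with two threads standing in for a double-click. This assumes an in-memory list instead of a real table. The names `create_project`, `double_submit`, and `run_repro` are hypothetical. The injected `time.sleep` plays the role of the deliberate delay right before the insert.

```python
import threading
import time

projects = []  # stand-in for a database table


def create_project(name, delay_s):
    # Check-then-insert with no guard: this is the race being reproduced.
    exists = any(p == name for p in projects)
    time.sleep(delay_s)  # injected delay before the insert widens the race window
    if not exists:
        projects.append(name)


def double_submit(name, delay_s):
    """Simulate a double-click: two requests for the same action at nearly the same time."""
    threads = [
        threading.Thread(target=create_project, args=(name, delay_s)) for _ in range(2)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return projects.count(name)


def run_repro(trials=10, delay_s=0.3):
    """Return how many trials produced a duplicate project."""
    duplicates = 0
    for i in range(trials):
        if double_submit(f"project-{i}-{time.time_ns()}", delay_s) > 1:
            duplicates += 1
    return duplicates
```

Run `run_repro()` before and after each change. A fix is only convincing if the duplicate count drops to zero with the delay still in place.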

Keep a short repro script (even a few manual steps) and treat it like a test: run it before and after every change. If you’re inheriting an AI-generated app, teams like FixMyMess often start by building this repro first, because it turns a vague complaint into a measurable failure.

Step by step: stabilize the flow instead of chasing timing

When you try to fix race conditions by “adding a delay” or “waiting for it to finish,” the bug usually moves somewhere else. The goal is to make the flow safe even when things happen twice, out of order, or at the same time.

Start by naming the shared thing that can be corrupted. It is often a single row (a user record), a counter (a balance), or a limited resource (inventory). If two paths can touch it at once, that shared access is the real problem, not the timing.

A practical way to fix race conditions is to pick one safety strategy and apply it consistently:

- Map who reads and who writes the shared resource (request A, job B, webhook C).

- Decide on the rule: serialize work (one at a time), lock the resource, or make the operation safe to repeat.

- Add an idempotency key for any action that might be retried (user double-click, network retry, queue redelivery).

- Protect writes with a transaction or a conditional update so you do not lose someone else’s change.

- Prove it with concurrency tests and repeated runs, not a single “looks good on my machine.”

Example: two “Place order” requests hit at once. Without protection, both read inventory=1, both subtract, and you ship two items. With idempotency, the second request reuses the first result. With a conditional update (only subtract if inventory is still 1) inside a transaction, only one request can succeed.

If you inherited AI-generated code, these safeguards are often missing or inconsistent. FixMyMess typically starts by adding the minimum idempotency and safe-write rules, then reruns the same scenario dozens of times until it stays boring.

Queues and background jobs: duplicates, retries, ordering

Most job queues deliver at least once, not exactly once. That means the same job can run twice, or run after a newer job, even if you only clicked the button once. If your handler assumes it is the only run, you get weird results: double charges, duplicate emails, or a record flipping between two states.

The safest approach is to make each job safe to repeat. Think in outcomes, not attempts. A job should be able to run again and still end up with the same final state.

A practical pattern that helps you fix race conditions in background processing:

- Add an idempotency key (orderId, userId + action + date) and store “already processed” before doing side effects.

- Record a clear job status (pending, running, done, failed) so re-runs can exit early.

- Treat external calls (payment, email, file upload) as “do once” steps with their own dedupe checks.

- Guard against out-of-order events using a version number or timestamp, and ignore older updates.

- Separate retryable failures (timeouts, rate limits) from non-retryable ones (bad input), and stop retrying when it will never succeed.
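A minimal sketch of an idempotent job handler, assuming SQLite via Python's `sqlite3` and a hypothetical `job_runs` table keyed by the idempotency key. The primary key makes “claim this key” atomic. The status moves from `running` to `done`, and a failed attempt deletes its claim so a later retry can run. `handle_job` and `sent_emails` are illustrative names.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE job_runs (idem_key TEXT PRIMARY KEY, status TEXT NOT NULL)")
sent_emails = []  # stand-in for a real side effect


def handle_job(idem_key, side_effect):
    """Run a side effect at most once per idempotency key, even if the job is redelivered."""
    with conn:
        # The primary key makes the claim atomic: only the first insert succeeds.
        cur = conn.execute(
            "INSERT OR IGNORE INTO job_runs (idem_key, status) VALUES (?, 'running')",
            (idem_key,),
        )
    if cur.rowcount == 0:
        return "skipped"  # another run already claimed or finished this key
    try:
        side_effect()
    except Exception:
        with conn:
            # Release the claim on failure so a later retry can try again.
            conn.execute("DELETE FROM job_runs WHERE idem_key = ?", (idem_key,))
        raise
    with conn:
        conn.execute("UPDATE job_runs SET status = 'done' WHERE idem_key = ?", (idem_key,))
    return "done"
```

One caveat: if the worker crashes after claiming, the row stays `running`. A real system needs a timeout or a sweeper that re-queues stale claims. External calls should also pass their own idempotency key where the provider supports one.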

Ordering issues are easy to miss. Example: a “cancel subscription” job runs, then a delayed “renew subscription” job arrives and re-activates the user. If you store a monotonically increasing version (or updatedAt) with each subscription change, the handler can reject stale messages and keep the newest truth.
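The stale-message check can be sketched like this, assuming the producer stamps each subscription change with a monotonically increasing `version`. The in-memory dict and `apply_event` are hypothetical. Against a real database, the compare and the write should happen in one conditional update.

```python
from dataclasses import dataclass


@dataclass
class Subscription:
    status: str
    version: int


subscriptions = {"user-1": Subscription(status="active", version=3)}


def apply_event(user_id, new_status, event_version):
    """Apply a subscription change only if it is newer than what is already stored."""
    current = subscriptions[user_id]
    if event_version <= current.version:
        return False  # stale or duplicate message: keep the newest truth
    subscriptions[user_id] = Subscription(status=new_status, version=event_version)
    return True
```

If “cancel” (version 5) is processed and the delayed “renew” (version 4) arrives afterwards, `apply_event` ignores the renew. The user stays cancelled.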

Be careful with global locks. They can hide the bug by slowing everything down, then hurt you in production by blocking unrelated work. Prefer per-entity locking (one user or one order at a time) or idempotency checks.
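A sketch of per-entity locking within one Python process, using a hypothetical `lock_for` registry and `credit` function. Work for the same user is serialized, while other users run in parallel. These locks only exist in process memory. With several workers or servers you would need a database row lock or a distributed lock instead, and this registry never evicts old entries.

```python
import threading
from collections import defaultdict

# One lock per entity ID; the registry itself is guarded by a small lock.
_registry_lock = threading.Lock()
_entity_locks = defaultdict(threading.Lock)
balances = defaultdict(int)


def lock_for(entity_id):
    with _registry_lock:
        return _entity_locks[entity_id]


def credit(user_id, amount):
    # Only work for the same user is serialized; other users proceed in parallel.
    with lock_for(user_id):
        current = balances[user_id]
        balances[user_id] = current + amount
```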

If you inherited an AI-generated queue worker that randomly duplicates work, teams like FixMyMess often start by adding idempotency and “stale event” checks first. Those two changes usually turn flaky behavior into predictable outcomes fast.

Web requests: double clicks, retries, and parallel sessions

Most flaky request bugs happen when the same action is sent twice, or when two actions arrive in the “wrong” order. Users double-click, browsers retry on a slow network, mobile apps resend after a timeout, and multiple tabs act like separate people.

To fix race conditions here, assume the client can and will send duplicates. The server must be correct even if the UI is wrong, slow, or offline for a moment.

Make “do the thing” endpoints safe to repeat

If a request can create side effects (charge a card, create an order, send an email), make it idempotent. A simple approach is an idempotency key per action. Store it with the result, and if the same key shows up again, return the same outcome.

Also watch timeouts. A common failure is: the server is still processing, the client times out, then retries. Without dedupe, you get two orders, two password reset emails, or two “welcome” messages.

Here’s a quick set of protections that usually pay off:

- Require an idempotency key for create/submit endpoints, and dedupe on (user, key)

- Use consistent error responses so clients retry only on safe errors

- Log a request ID and the idempotency key on every attempt

- Put side effects after you’ve persisted the “this action happened” record

- Treat “already done” as success, not as a scary error

Stop parallel requests from overwriting state

Overwrites happen when two requests read the same old state, then both write updates. Example: two tabs update a profile and the last one wins, silently undoing the other.

Prefer server-side checks like version numbers (optimistic locking) or explicit rules like “only update if status is still PENDING”. If you inherited AI-generated handlers that do read-modify-write without guards, this is a common place FixMyMess sees random user reports that “sometimes it saves, sometimes it doesn’t”.
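Optimistic locking can be sketched with a `version` column, here in SQLite through Python's `sqlite3`. The `profiles` table and the `read_profile` and `save_profile` helpers are hypothetical. Each client sends back the version it read, and the `UPDATE ... WHERE version = ?` only succeeds for the first writer. The second writer gets a conflict instead of silently overwriting.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE profiles (id INTEGER PRIMARY KEY, name TEXT, bio TEXT, version INTEGER NOT NULL)"
)
conn.execute("INSERT INTO profiles VALUES (1, 'Ana', '', 1)")
conn.commit()


def read_profile(profile_id):
    return conn.execute(
        "SELECT name, bio, version FROM profiles WHERE id = ?", (profile_id,)
    ).fetchone()


def save_profile(profile_id, name, bio, expected_version):
    """Write only if nobody else saved since this client read the row."""
    with conn:
        cur = conn.execute(
            "UPDATE profiles SET name = ?, bio = ?, version = version + 1 "
            "WHERE id = ? AND version = ?",
            (name, bio, profile_id, expected_version),
        )
    if cur.rowcount == 0:
        return {"status": 409, "error": "This record changed, refresh and try again"}
    return {"status": 200}
```

Two tabs that both read version 1 illustrate the outcome. The first save succeeds and bumps the row to version 2. The second save matches no row and returns the 409, so neither change is lost without the user knowing.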

State updates: preventing overwrites and inconsistent data

A lot of flaky behavior is not “async” in a queue or network. It is two parts of your app updating the same piece of state, in a different order each run. One request wins today, the other wins tomorrow.

The classic problem is a lost update: two workers read the same old value, both compute a new value, and the last write overwrites the first. Example: two devices update a user’s notification settings. Both read “enabled”, one turns it off, one changes the sound, and the final record silently drops one change.

When you can, prefer atomic operations over read then write. Databases often support safe primitives like increment, compare-and-set, and “update where version = X”. That turns timing into a clear rule: only one update can succeed, and the loser retries with fresh data.
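The “loser retries with fresh data” part can be shown with a compare-and-set loop against a tiny in-memory `VersionedStore`. The store is a hypothetical stand-in for a row with a version column. Each writer re-reads and reapplies its change, so two devices editing different settings both keep their change. The `change` function may run more than once, so it should be a pure function of the current value.

```python
import threading


class VersionedStore:
    """Tiny in-memory stand-in for a database row with compare-and-set."""

    def __init__(self, value):
        self._lock = threading.Lock()
        self._value = dict(value)
        self._version = 0

    def read(self):
        with self._lock:
            return dict(self._value), self._version

    def compare_and_set(self, new_value, expected_version):
        # Same idea as: UPDATE ... SET ... WHERE version = expected_version
        with self._lock:
            if self._version != expected_version:
                return False
            self._value = dict(new_value)
            self._version += 1
            return True


def update_with_retry(store, change, max_attempts=5):
    """Apply `change` to fresh data; if another writer got there first, re-read and retry."""
    for _ in range(max_attempts):
        current, version = store.read()
        updated = change(current)
        if store.compare_and_set(updated, version):
            return updated
    raise RuntimeError("too much contention, giving up")
```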

A second fix is to validate state transitions. If an order can only move from pending -> paid -> shipped, reject shipped -> paid even if it arrives late. This prevents late requests, retries, or background jobs from pushing state backward.
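A sketch of transition validation for the pending -> paid -> shipped example, assuming orders are plain dicts and using a hypothetical `InvalidTransition` exception. Repeating the current status is a no-op, so duplicate deliveries stay harmless. In a database, pair this with a conditional update (`WHERE status = <current>`) so another writer cannot change the row between the check and the write.

```python
class InvalidTransition(Exception):
    pass


# Allowed moves from the example: pending -> paid -> shipped.
ALLOWED_TRANSITIONS = {
    "pending": {"paid"},
    "paid": {"shipped"},
    "shipped": set(),  # terminal: nothing may move it backward
}


def transition(order, new_status):
    current = order["status"]
    if new_status == current:
        return order  # duplicate delivery of the same change: treat as a no-op
    if new_status not in ALLOWED_TRANSITIONS.get(current, set()):
        raise InvalidTransition(f"{current} -> {new_status} is not allowed")
    order["status"] = new_status
    return order
```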

Caching can make this worse. A stale read from a cache can trigger a “correct” write based on old data. If you cache state that drives writes, make sure you either bust the cache on updates, or read from the source of truth right before writing.

One simple way to fix race conditions is ownership: decide one place that is allowed to update a piece of state, and route all changes through it. Good ownership rules usually look like this:

- One service owns the write, others only request changes

- One table or document is the source of truth

- Updates include a version number (or timestamp) and are rejected if stale

- Only allowed state transitions are accepted

At FixMyMess, we often see AI-generated apps update the same record from UI code, API handlers, and background jobs at once. Making ownership explicit is usually the fastest way to stop inconsistent data.

Common traps that keep flaky bugs alive

The fastest way to waste a week on flaky async bugs is to “treat the clock” instead of the cause. If the bug depends on timing, you can make it disappear for a day and still ship it.

One common mistake is adding more retries when you do not have idempotency. If a payment job fails halfway through and then retries, you might charge twice. Retries are only safe when each attempt can run again without changing the outcome.

Another trap is sprinkling random delays to “spread out” collisions. It often hides the problem in staging, then makes production worse because load patterns change. Delays also make your system slower and harder to reason about.

Big locks can also backfire. A single giant mutex around “the whole flow” may stop the flake, but it can create a new failure mode: long waits, timeouts, and cascading retries that bring the bug back in a different form.

Watch for these patterns that keep non-deterministic behavior alive:

- Treating every failure as retryable (timeouts, validation errors, auth errors, and conflicts need different handling)

- Declaring victory because it passed locally once (real concurrency rarely shows up on a quiet laptop)

- Logging only “error happened” without a request/job id, attempt number, or state version

- Fixing symptoms in one layer while the race still exists underneath (UI, API, and worker can all overlap)

- “Temporary” hacks that become permanent (extra retries, sleeps, or catch-all exception blocks)
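A sketch of a bounded retry helper in Python. It assumes your code raises one of two hypothetical exception types: `RetryableError` for timeouts and rate limits, and `PermanentError` for validation or auth failures. The helper stops immediately on permanent errors, caps the attempt count, and backs off exponentially. It also passes the attempt number through so it can be logged. It only makes retries safe if the operation itself is idempotent.

```python
import time


class RetryableError(Exception):
    """Timeouts, rate limits: worth another attempt."""


class PermanentError(Exception):
    """Validation or auth failures: retrying will never help."""


def call_with_retries(operation, max_attempts=3, base_delay_s=0.1):
    """Retry only retryable failures, with a cap and exponential backoff.

    `operation(attempt)` receives the attempt number so it can log it.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation(attempt)
        except PermanentError:
            raise  # stop immediately: this input will never succeed
        except RetryableError:
            if attempt == max_attempts:
                raise  # give up instead of retrying forever
            time.sleep(base_delay_s * 2 ** (attempt - 1))
```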

If you inherited an AI-generated codebase with these band-aids, a focused audit can quickly show where retries, locks, and missing idempotency are masking the real race. That is the point where a targeted repair beats more guessing.

Quick checklist before you ship the fix

Before you call it done, make sure you are not just “winning the timing lottery.” The goal is to fix race conditions so the same inputs produce the same outcome every time.

Here’s a quick pre-ship checklist that catches most repeat offenders:

- Run it twice on purpose. Trigger the same action two times (double-click, two workers, two tabs) and confirm the result is still correct, not just “not broken.”

- Look for the one shared thing. Identify the shared resource (row, file, cache key, balance, inbox) and decide how it is protected: transaction, lock, unique constraint, or conditional update.

- Audit retries for side effects. If a retry happens, do you send another email, charge again, or write a duplicate row? If yes, add idempotency so “same request” equals “same effect.”

- Compare ordering in logs. In a good run vs a bad run, do events arrive in a different order (job started before record exists, callback before state saved)? If the outcome still depends on that order, you have probably fixed a symptom, not the cause.

- Prefer atomic guarantees over sleeps. If a transaction, unique index, or “update only if version matches” removes the bug, that’s usually safer than adding delays.

Example: if “Create subscription” sometimes charges twice, verify that the payment provider call is keyed by an idempotency token, and your database write is guarded by a unique constraint on that token. That way, duplicates turn into a harmless no-op instead of a customer support fire.

Example: stabilizing a flaky workflow end to end

Picture a simple workflow: two teammates edit the same customer record, and a background job also updates that record after an import. Everything looks fine in demos, but once real users arrive you start seeing weird results.

Today, the app uses “last write wins”. User A saves, then User B saves a second later and overwrites A’s changes without noticing. Meanwhile, the queue job retries after a timeout and sends the “Customer updated” notification twice.

To confirm it’s non-deterministic (and to fix race conditions instead of guessing), you can create a repeatable repro:

- Open the record in two tabs (Tab A and Tab B)

- Change different fields in each tab

- Click save in both tabs within a second

- Trigger the background job, then force a retry (for example by temporarily throwing an error after sending)

- Check the final record state and count notifications

If each run ends differently, you’ve found the timing bug.

Stabilizing it usually takes two changes. First, make notifications idempotent: add an idempotency key like customerId + eventType + version and store it, so the same notification cannot be sent twice even if a job retries.

Second, make the record update atomic. Wrap the update in a database transaction and add a version check (optimistic locking). If Tab B tries to save an old version, return a clear “This record changed, refresh and try again” message instead of silently overwriting.

Re-test the same repro 50 times. You want identical outcomes every run: one final state you can explain, and exactly one notification.

This is the kind of issue FixMyMess often sees in AI-generated apps: retries and async code exist, but the safety rails (idempotency, locking, transactions) are missing.

Next steps: make the system predictable again

Pick the places where flakiness hurts you most. Don’t start with “the whole app”. Start with the 2-3 critical flows where a bad outcome is expensive, like: charging a card, creating an account, placing an order, sending an email, or updating inventory.

Write those flows down in plain steps (what triggers it, what it calls, what data changes). That small map gives you a shared “truth” when people argue about timing. It also makes it easier to fix race conditions without guessing.

Choose one guardrail you can ship this week. Small changes often remove most of the risk:

- Add an idempotency key to the action that creates something (payments, orders, emails)

- Use a conditional update (only update if the version matches, or if the status is still expected)

- Add a unique constraint so duplicates fail fast and safely

- Enforce ordering for one queue topic (or collapse multiple jobs into one “latest state” job)

- Put a timeout and retry limit where retries currently run forever
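Collapsing jobs can be sketched as a queue that keeps only the newest pending payload per entity. `LatestStateQueue` is hypothetical and lives in one process. With a real broker, a common variant is to enqueue only the entity ID and have the worker read the latest state from the database when it runs.

```python
from collections import OrderedDict


class LatestStateQueue:
    """Keeps only the newest pending job per entity; older pending ones are replaced."""

    def __init__(self):
        self._pending = OrderedDict()  # entity_id -> payload

    def enqueue(self, entity_id, payload):
        # A newer update for the same entity replaces the queued one and moves
        # to the back, so it runs after entities that were queued earlier.
        self._pending.pop(entity_id, None)
        self._pending[entity_id] = payload

    def drain(self):
        """Yield (entity_id, payload) pairs in the order entities were last updated."""
        while self._pending:
            yield self._pending.popitem(last=False)
```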

If your codebase was generated by AI tools and feels impossible to reason about, plan a focused cleanup: one flow, one owner, and one week to remove hidden shared state and “magic” retries.

Example: if “Create order” sometimes sends two confirmation emails, make the email send idempotent first, then tighten the queue worker so it can safely retry without changing the result.

If you want a fast, clear plan, FixMyMess can run a free code audit to pinpoint race conditions, retries, and unsafe state updates. And if you need stabilization quickly, we can diagnose and repair AI-generated prototypes and prep them for production in 48-72 hours with expert verification.