Server-side input validation for APIs that rejects nonsense

Server-side input validation for APIs keeps bad data out. Learn safe parsing, schemas, strict types, and clear errors that actually protect production.

Why your API keeps accepting nonsense

Most APIs that “accept anything” were built for the happy path. The code assumes the client will send the right fields, in the right shape, with the right types. So when a request arrives with missing fields, extra fields, strings where numbers should be, or a totally different object, the server quietly squeezes it into place, keeps going, and still returns 200.

That’s especially common in AI-generated endpoints. They often deserialize JSON straight into objects, skip strict checks, and rely on “it worked in the demo” data. The result is an API that looks fine in quick tests, but behaves unpredictably when real users, real integrations, or attackers show up.

Client-side checks do not protect you. Browsers can be bypassed, mobile apps can be modified, and third-party clients can send any payload they want. Even well-meaning clients can drift out of sync after a release and start sending fields your server never expected.

In production, weak validation turns into expensive problems:

- Crashes and timeouts when code hits an unexpected type or null

- Bad records that look valid but break reporting and workflows later

- Security bugs when untrusted input reaches queries, file paths, or auth logic

- Debugging pain because the same bad input fails differently in different places

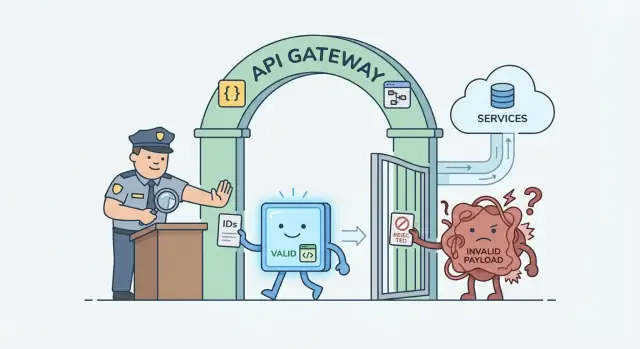

The goal of server-side input validation for APIs is simple: reject bad input early, consistently, and safely. That means one clear gate at the edge of your API that checks shape, types, and limits before your business logic runs.

Teams often come to FixMyMess with endpoints that “work” but accept garbage like empty emails, negative quantities, or objects where an ID should be. The fix is rarely complicated: add strict validation up front so nonsense never enters the system in the first place.

Validation, sanitization, and parsing: the simple difference

If you want an API to stop accepting nonsense, you need to separate three jobs that often get mixed together: validation, sanitization, and parsing.

Validation answers: “Is this input allowed?” It checks shape and rules: required fields, types, ranges, lengths, formats, and allowed values. Good server-side input validation for APIs rejects bad requests early, before they touch your database, auth logic, or third-party calls.

Sanitization answers: “If this input is allowed, how do we make it safe to store or display?” Examples are escaping text before rendering in HTML, stripping control characters, or removing unexpected markup. Sanitization is not a substitute for validation. A cleaned-up string can still be the wrong type, too long, or missing key fields.

Parsing (and normalization) answers: “Turn unknown data into a known shape.” You decode JSON, convert strings to numbers, apply defaults, and normalize formats (like lowercasing an email). The safe pattern is parse, then use. The risky pattern is use, then hope.

A simple example: a signup endpoint receives age: "25" (string), email: "[email protected] ", and role: "admin". A safe flow is:

- Parse and normalize: trim email, lowercase it, convert age to a number

- Validate: age must be 13-120, role must be one of the allowed values

- Only then: create the user

That “strict input contract” (a schema with clear types and limits) is the baseline. It cannot prevent every attack by itself, but it blocks accidental garbage, reduces edge-case bugs, and makes security checks much easier to get right.
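The parse, normalize, validate order described above can be sketched in plain TypeScript without any library. This is a minimal illustration, not a reference implementation: the names `parseSignup` and `ALLOWED_ROLES`, the allowed role values, and the deliberately simple email pattern are all assumptions made for the example.

```typescript
// Hypothetical names and role values, chosen for illustration only.
const ALLOWED_ROLES = ["user", "editor"] as const;
type Role = (typeof ALLOWED_ROLES)[number];

type SignupInput = { email: string; age: number; role: Role };
type SignupResult =
  | { ok: true; value: SignupInput }
  | { ok: false; errors: string[] };

function parseSignup(raw: unknown): SignupResult {
  if (typeof raw !== "object" || raw === null || Array.isArray(raw)) {
    return { ok: false, errors: ["body must be a JSON object"] };
  }
  const body = raw as Record<string, unknown>;
  const rawEmail = body["email"];
  const rawAge = body["age"];
  const rawRole = body["role"];
  const errors: string[] = [];

  // Parse and normalize: trim and lowercase the email, convert age to a number.
  const email = typeof rawEmail === "string" ? rawEmail.trim().toLowerCase() : "";
  const age =
    typeof rawAge === "number" ? rawAge
    : typeof rawAge === "string" && /^\d{1,3}$/.test(rawAge) ? Number(rawAge)
    : NaN;
  const role = ALLOWED_ROLES.find((allowed) => allowed === rawRole);

  // Validate: simple format check, range check, role allowlist.
  if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) {
    errors.push("email must be a valid address");
  }
  if (!Number.isInteger(age) || age < 13 || age > 120) {
    errors.push("age must be an integer from 13 to 120");
  }
  if (role === undefined) {
    errors.push("role must be one of: " + ALLOWED_ROLES.join(", "));
  }

  if (errors.length > 0 || role === undefined) return { ok: false, errors };
  // Only then: hand the typed value to the code that creates the user.
  return { ok: true, value: { email, age, role } };
}
```

With these assumed roles, a request that sends role: "admin" is rejected instead of quietly creating an administrator.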

Common ways weak input validation breaks real systems

Weak validation rarely fails in a neat way. It usually “works” in testing, then breaks under real traffic because real users, bots, and buggy clients send weird inputs.

One common failure is over-posting. A client sends extra fields you never planned for, and your code accidentally uses them (or stores them) because it spreads the whole request body into a database write. That can flip flags like isAdmin, change pricing fields, or override internal settings without anyone noticing.
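One way to close the over-posting hole is to copy only allowlisted keys into the object you write, rather than spreading the request body. The sketch below assumes a hypothetical profile update with two editable fields; `PROFILE_UPDATE_FIELDS`, `pickAllowed`, and `unknownKeys` are illustrative names.

```typescript
// Hypothetical field names; the point is that only listed keys survive.
const PROFILE_UPDATE_FIELDS = ["displayName", "bio"] as const;
type ProfileField = (typeof PROFILE_UPDATE_FIELDS)[number];

// Copies allowlisted keys only. Anything else in the body is left behind.
function pickAllowed(body: Record<string, unknown>): Partial<Record<ProfileField, unknown>> {
  const picked: Partial<Record<ProfileField, unknown>> = {};
  for (const key of PROFILE_UPDATE_FIELDS) {
    if (Object.prototype.hasOwnProperty.call(body, key)) {
      picked[key] = body[key];
    }
  }
  return picked;
}

// Lists keys that are not in the allowlist, so the handler can reject the request.
function unknownKeys(body: Record<string, unknown>): string[] {
  const allowed: readonly string[] = PROFILE_UPDATE_FIELDS;
  return Object.keys(body).filter((key) => !allowed.includes(key));
}
```

Whether you drop the extra keys or reject the whole request is a design choice; rejecting makes client bugs visible sooner. The picked values are still untyped here and need their own type checks before use.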

Another is type confusion. If you expect a number but accept a string, you get surprises: "10" becomes 10 in one place, stays a string in another, and suddenly sorting, comparisons, and math are wrong. Even worse, "", "NaN", or "001" can slip through and create hard-to-debug edge cases.

Limits are where “happy path” code collapses. Without size checks, a single request can send a 5 MB string, a 50,000-item array, or deeply nested JSON that spikes CPU and memory. The API might not crash every time, but it slows down, times out, and causes cascading failures.
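As a rough illustration of structural limits, the function below walks an already-parsed JSON value and reports the first limit it breaks. The limit values and the name `findLimitViolation` are assumptions for this sketch. It does not replace a byte-size cap on the raw request body, which has to be enforced before the JSON is parsed at all.

```typescript
// Hypothetical limits; tune them to your own payloads.
const MAX_DEPTH = 5;
const MAX_ARRAY_ITEMS = 1000;
const MAX_STRING_LENGTH = 10000;

// Returns a reason string if the value breaks a limit, otherwise null.
function findLimitViolation(value: unknown, depth = 0): string | null {
  if (depth > MAX_DEPTH) return "nesting too deep";
  if (typeof value === "string") {
    return value.length > MAX_STRING_LENGTH ? "string too long" : null;
  }
  if (Array.isArray(value)) {
    if (value.length > MAX_ARRAY_ITEMS) return "array too long";
    for (const item of value) {
      const problem = findLimitViolation(item, depth + 1);
      if (problem !== null) return problem;
    }
    return null;
  }
  if (typeof value === "object" && value !== null) {
    for (const child of Object.values(value)) {
      const problem = findLimitViolation(child, depth + 1);
      if (problem !== null) return problem;
    }
  }
  return null; // numbers, booleans, null, and in-bounds containers
}
```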

Security issues happen when you trust input too early. If unvalidated data is used in SQL queries, filters, file paths, or HTML output, you open the door to injection and data leaks.

Here are the patterns that show up again and again when server-side input validation for APIs is missing:

- Writing “unknown” fields into the database because the payload is not whitelisted

- Coercing types implicitly and then making decisions on the wrong value

- Allowing unbounded strings/arrays, leading to slowdowns and outages

- Passing raw input into queries or templates before it is checked and parsed

FixMyMess often sees these issues in AI-generated endpoints: they look clean, but accept nearly any JSON and hope downstream code handles it. Real systems need the opposite: reject nonsense early, clearly, and consistently.

Choose a schema approach that fits your stack

Whichever stack you use, the aim is the same: every request gets checked against a clear contract before it touches your business logic. The easiest way to do that is to use a schema that can both validate and safely parse inputs into the types your code expects.

If you are in JavaScript or TypeScript, schema libraries like Zod or Joi are popular because they can coerce types carefully (when you allow it) and give readable errors. If you are in Python, Pydantic is a common choice because it turns incoming data into strict models with defaults. If you are already using an OpenAPI-first or JSON Schema workflow, sticking with JSON Schema can keep your docs and validation aligned. Many frameworks also have built-in validators, which can be enough for smaller APIs.

Where you run validation matters. Two common patterns are:

- Middleware that validates body, query, and params before the handler runs

- Validation inside each route handler, close to the code that uses the data

Middleware is easier to keep consistent. Per-handler validation can be clearer when each route has special rules. Either way, try to make the schema the single source of truth for request body, query strings, and path params, so you do not end up validating the body but forgetting the params.

A practical example: an endpoint expects limit (number) in the query and email (string) in the body. Without a schema, AI-generated code often accepts limit="ten" or email=[] and fails later in confusing ways. With a schema, those get rejected immediately with a clean error.

Finally, plan for change. When your API contract evolves, version your schemas the same way you version endpoints or clients. Keep old schemas for old clients, and introduce new schemas with a clear cutover. This is a common fix we see at FixMyMess when teams inherit fast-built AI prototypes and need to make them safe without rewriting everything.

Step-by-step: add server-side validation to one endpoint

Pick one endpoint that causes pain, like POST /users or POST /checkout. The goal is server-side input validation for APIs that rejects nonsense before your business logic runs.

1) Define a small schema (only what you need)

Start with required fields only. If the endpoint creates a user, maybe you truly need email and password, not 12 optional fields “just in case”. Keep the schema strict and explicit.

Validate each input source separately: path params, query params, and body. Treat them as different buckets with different risks.

// Pseudocode schema
const bodySchema = {
  email: { type: "email", required: true, maxLen: 254 },
  password: { type: "string", required: true, minLen: 12, maxLen: 72 }
};

const querySchema = { invite: { type: "string", required: false, maxLen: 64 } };

const pathSchema = { orgId: { type: "uuid", required: true } };

2) Validate + parse before anything else

Make validation the first lines in the handler. Do not “fix up” random types later.

- Parse body, query, and path separately into typed values

- Reject unknown fields (strict mode) so extra keys do not slip through

- Add bounds: min/max, length limits, and simple format checks

A concrete example: if someone sends { "email": [], "password": true, "role": "admin" }, strict parsing should reject it. It should not coerce types, and it should definitely not accept role if your schema did not allow it.
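To make the strict behavior concrete, here is a hand-written sketch of the body check for this endpoint. It is not the API of any particular schema library; `parseCreateUserBody` and the simple email pattern are assumptions, and the length limits mirror the pseudocode schema above.

```typescript
type CreateUserBody = { email: string; password: string };
type CreateUserResult =
  | { ok: true; data: CreateUserBody }
  | { ok: false; errors: string[] };

const CREATE_USER_KEYS = ["email", "password"];

function parseCreateUserBody(raw: unknown): CreateUserResult {
  if (typeof raw !== "object" || raw === null || Array.isArray(raw)) {
    return { ok: false, errors: ["body must be a JSON object"] };
  }
  const body = raw as Record<string, unknown>;
  const errors: string[] = [];

  // Strict mode: a key outside the schema is an error, not something to ignore.
  for (const key of Object.keys(body)) {
    if (!CREATE_USER_KEYS.includes(key)) errors.push("unexpected field " + key);
  }

  const email = body["email"];
  const password = body["password"];

  // No coercion: an array or a boolean is rejected, never stringified.
  if (typeof email !== "string" || email.length > 254 || !/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) {
    errors.push("email must be a valid email address");
  }
  if (typeof password !== "string" || password.length < 12 || password.length > 72) {
    errors.push("password must be a string of 12 to 72 characters");
  }

  if (errors.length > 0 || typeof email !== "string" || typeof password !== "string") {
    return { ok: false, errors };
  }
  return { ok: true, data: { email, password } };
}
```

For the payload in the example above, this returns three errors: one for the unexpected role field, one for email, and one for password.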

3) Add tests for bad inputs

One good invalid test is worth ten happy-path tests. Try missing fields, wrong types, extra fields, huge strings, negative numbers, and weird encodings. This is often where AI-generated endpoints fail, and it is the kind of fix teams bring to FixMyMess when a prototype hits production reality.

Safe parsing patterns that keep bugs out

Many bugs come not from missing validation but from what happens after it: the code validates, then keeps reading the raw request. The safest habit is to parse the input once, get a clear success or failure, and then only work with the parsed result.

Parse first, then forget the raw request

A good schema library gives you a “safe parse” style API: it returns either a parsed value you can trust or an error you can return to the client. This is the core of server-side input validation for APIs.

// Example shape; works similarly in many schema libraries.
// `result.error.fields` is illustrative: the exact error property differs per library.
const result = UserCreateSchema.safeParse(req.body);

if (!result.success) {
  return res.status(400).json({ error: "Invalid input", fields: result.error.fields });
}

const input = result.data; // only use this from now on

// Never touch req.body again in this handler
createUser({ email: input.email, age: input.age });

This one change prevents a common “happy path” bug in AI-generated endpoints: the code validates, but then later reads from req.body anyway and accidentally accepts extra fields, wrong types, or weird edge cases.

Normalize only when you mean it

Normalization can be helpful, but it should be a deliberate choice, not an accident.

- Trim strings only for fields where spaces are never meaningful (emails, usernames).

- Pick one casing rule when comparisons matter (for example, lowercase emails).

- Convert dates from strings to Date objects only if your schema guarantees the format.

- Keep IDs exact; do not auto-trim or change them unless your system already enforces it.

Avoid “magic coercion”

Be careful with coercion like turning "123" into 123. It hides bad inputs and makes it harder to spot client bugs or abuse. Coerce only when you truly need it (like query params that are always strings), and when you do, enforce limits (min, max, integer-only) so you do not accept nonsense like "999999999999".
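When coercion is intentional, it helps to make it narrow and explicit. The helper below is a small sketch with an assumed name, `parseBoundedInt`, for query parameters: it accepts only plain decimal digits without leading zeros and then enforces a range. It does not handle negative numbers, which would need their own rule.

```typescript
// Parses a query-string value as a bounded non-negative integer, or returns null.
function parseBoundedInt(raw: unknown, min: number, max: number): number | null {
  // Repeated query params often arrive as arrays; anything but a string is rejected.
  if (typeof raw !== "string") return null;
  // Digits only, no leading zeros, at most 9 digits so the value stays a safe integer.
  if (!/^(0|[1-9]\d{0,8})$/.test(raw)) return null;
  const value = Number(raw);
  return value >= min && value <= max ? value : null;
}
```

Returning null instead of a "best guess" keeps the decision in the handler: it can respond with a 400 rather than continuing with a value the client never meant to send.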

If you inherited an AI-built API that “works” but accepts garbage, this parse-then-use-only-parsed-data pattern is one of the fastest fixes to apply safely.

Strict types, defaults, and limits that matter

Strict validation is not only about “is this field present.” It is about making your API accept only the shapes and values you are willing to support. Good server-side input validation for APIs starts at the boundary: the request body, query string, and headers.

Defaults belong at the boundary

Set defaults during parsing, not deep in your business logic. That way, everything after the boundary can assume a complete, known shape.

Example: if page is missing, default it to 1 while parsing. Do not let random parts of the code decide later, because you will end up with different behavior in different endpoints.

Also decide what “missing” means. A missing field is not the same as a field set to null.

- Optional: the client may omit it (ex: middleName not provided).

- Nullable: the client may send null (ex: deletedAt: null when not deleted).

Treat these as different cases in your schema. Otherwise you will get odd bugs like null passing validation but breaking code that expects a string.
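The difference between optional and nullable is easy to blur in hand-written checks. The two helpers below are an illustrative sketch with assumed names, showing one way to keep the cases apart for string fields.

```typescript
type FieldCheck = { ok: true } | { ok: false; error: string };

// Optional: the key may be absent, but if present it must be a string (null is rejected).
function checkOptionalString(body: Record<string, unknown>, key: string): FieldCheck {
  if (!Object.prototype.hasOwnProperty.call(body, key)) return { ok: true };
  return typeof body[key] === "string"
    ? { ok: true }
    : { ok: false, error: `${key} must be a string when provided` };
}

// Nullable: the key must be present, and its value is either a string or an explicit null.
function checkNullableString(body: Record<string, unknown>, key: string): FieldCheck {
  if (!Object.prototype.hasOwnProperty.call(body, key)) {
    return { ok: false, error: `${key} is required (send null when there is no value)` };
  }
  const value = body[key];
  return value === null || typeof value === "string"
    ? { ok: true }
    : { ok: false, error: `${key} must be a string or null` };
}
```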

Enums and limits stop entire classes of bugs

If you know the allowed values, say so. Enums prevent “close enough” strings (like adminn) from sneaking in and creating hidden states.

A realistic example: an AI-generated endpoint takes status and sort from query params. Without strict types, status=donee might be treated as truthy and return the wrong records, and sort=DROP TABLE might get concatenated into a query.

Add limits that protect your database and your wallet:

- Cap limit (for example, max 100)

- Clamp page to 1+

- Allowlist sortBy fields (ex: createdAt, name)

- Restrict order to asc or desc

- Set max string lengths (names, emails, search terms)

These are common fixes we apply when repairing AI-built prototypes at FixMyMess, because “happy path” code often accepts anything and only fails in production when real users send real chaos.
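The list-query limits above might be enforced in one place like this. It is a sketch under assumptions: the default page size of 20, the upper bound on page, and the names `parseListQuery`, `oneOf`, and `intInRange` are all invented for the example. It also rejects out-of-range values rather than silently clamping them, which is a stricter choice than clamping and makes client mistakes visible.

```typescript
const SORTABLE_FIELDS = ["createdAt", "name"] as const;
const SORT_ORDERS = ["asc", "desc"] as const;

type ListQuery = {
  limit: number;
  page: number;
  sortBy: (typeof SORTABLE_FIELDS)[number];
  order: (typeof SORT_ORDERS)[number];
};
type ListQueryResult = { ok: true; query: ListQuery } | { ok: false; error: string };

// A missing value falls back to the default; anything present must match exactly.
function oneOf<T extends string>(allowed: readonly T[], raw: unknown, fallback: T): T | null {
  if (raw === undefined) return fallback;
  const match = allowed.find((option) => option === raw);
  return match === undefined ? null : match;
}

function intInRange(raw: unknown, fallback: number, min: number, max: number): number | null {
  if (raw === undefined) return fallback;
  if (typeof raw !== "string" || !/^\d{1,6}$/.test(raw)) return null;
  const value = Number(raw);
  return value >= min && value <= max ? value : null;
}

function parseListQuery(raw: Record<string, unknown>): ListQueryResult {
  const limit = intInRange(raw["limit"], 20, 1, 100);
  const page = intInRange(raw["page"], 1, 1, 100000);
  const sortBy = oneOf(SORTABLE_FIELDS, raw["sortBy"], "createdAt");
  const order = oneOf(SORT_ORDERS, raw["order"], "asc");

  if (limit === null) return { ok: false, error: "limit must be an integer from 1 to 100" };
  if (page === null) return { ok: false, error: "page must be an integer of 1 or more" };
  if (sortBy === null) return { ok: false, error: "sortBy must be one of: " + SORTABLE_FIELDS.join(", ") };
  if (order === null) return { ok: false, error: "order must be asc or desc" };
  return { ok: true, query: { limit, page, sortBy, order } };
}
```

Because sortBy can only ever be one of the allowlisted names, a value like DROP TABLE never reaches the code that builds the query.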

Helpful error responses without oversharing

When input is wrong, your API should be clear and boring: return a 400, explain what to fix, and keep the response shape the same every time. A stable shape helps clients handle errors without special cases, and it keeps your team from accidentally leaking details later.

A simple pattern is an error envelope plus field-level details:

{
  "error": {
    "code": "INVALID_INPUT",
    "message": "Some fields are invalid.",
    "fields": [
      { "path": "email", "message": "Must be a valid email address." },
      { "path": "age", "message": "Must be an integer between 13 and 120." }
    ]
  }
}

Keep messages focused on what the caller can change. Avoid echoing back raw input (especially strings that might contain HTML or SQL) and never include stack traces, SQL errors, internal IDs, or library names. Instead of "ZodError: Expected number, received string", say "age must be a number".

Schema libraries often give you detailed errors. Map them into your API format and keep the field paths predictable (dot paths work well). If there are many issues, cap the number you return (for example, the first 10) so the response stays small.
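A mapping layer for this can be quite small. The sketch below assumes a hypothetical issue shape (`path` plus a `code`) loosely modeled on what schema libraries report; the issue codes and the wording of each message are made up for the example, so adapt them to whatever your library actually emits.

```typescript
// Hypothetical issue shape and codes; real libraries name these differently.
type LibraryIssue = { path: (string | number)[]; code: string };

type ApiError = {
  error: {
    code: "INVALID_INPUT";
    message: string;
    fields: { path: string; message: string }[];
  };
};

const MAX_REPORTED_FIELDS = 10;

// Your own wording per issue code, so library names and internals never leak.
const FIELD_MESSAGES = new Map<string, string>([
  ["required", "Is required."],
  ["invalid_type", "Has the wrong type."],
  ["too_long", "Is too long."],
]);

function toApiError(issues: LibraryIssue[]): ApiError {
  return {
    error: {
      code: "INVALID_INPUT",
      message: "Some fields are invalid.",
      // Cap the list so a hostile payload cannot produce a huge response.
      fields: issues.slice(0, MAX_REPORTED_FIELDS).map((issue) => ({
        path: issue.path.join("."), // ["items", 0, "sku"] becomes "items.0.sku"
        message: FIELD_MESSAGES.get(issue.code) ?? "Is invalid.",
      })),
    },
  };
}
```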

For your own debugging, log validation failures safely. Record:

- request ID and endpoint

- field paths that failed (not raw values)

- caller metadata you already trust (user ID, tenant)

- a redacted snapshot of the payload

This is a common fix in AI-generated API code: the UI gets friendly feedback, while your logs still explain why requests were rejected, without exposing secrets.
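One possible way to build the redacted snapshot is to log the shape of the payload rather than its contents. In this sketch, the list of sensitive key names, the depth and array caps, and the function name `redactForLog` are assumptions; a real system would extend the key list to match its own fields.

```typescript
// Hypothetical list of keys whose values must never reach the logs.
const SENSITIVE_KEYS = new Set(["password", "token", "secret", "authorization"]);

// Replaces values with type/size summaries so logs show shape, not content.
function redactForLog(value: unknown, depth = 0): unknown {
  if (depth > 5) return "[truncated]";
  if (value === null) return null;
  if (Array.isArray(value)) {
    // Keep only the first 20 items so a huge array cannot flood the log.
    return value.slice(0, 20).map((item) => redactForLog(item, depth + 1));
  }
  if (typeof value === "object") {
    const out: Record<string, unknown> = {};
    for (const [key, child] of Object.entries(value)) {
      out[key] = SENSITIVE_KEYS.has(key.toLowerCase())
        ? "[redacted]"
        : redactForLog(child, depth + 1);
    }
    return out;
  }
  if (typeof value === "string") return `[string, ${value.length} chars]`;
  return typeof value; // "number", "boolean", and so on
}
```

A snapshot like this still shows that email arrived as an array or that bio was 5 million characters long, which is usually what you need to debug a rejection.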

Common traps when adding validation

The biggest mistake is validating too late. If you read fields, build a SQL query, or write to storage and only then check the values, the damage is already done. Validation needs to happen at the boundary: parse, validate, and only then touch the rest of your code.

Another common trap is trusting types that only exist at compile time. AI-written code often looks “typed” but still accepts anything at runtime. A UserId: string annotation does not stop "", " ", or "DROP TABLE" from arriving. Server-side input validation for APIs has to run on every request, not just in your editor.

“Allow unknown fields for flexibility” also tends to backfire. Extra fields become a hiding place for bugs, confused clients, and security issues (like a stray role: "admin" getting merged into your user object). If you need forward compatibility, accept only known fields and version the API when the shape changes.

Frameworks can sabotage your intent by silently coercing types. If "false" becomes true, or "123abc" becomes 123, you will ship logic bugs that are hard to reproduce. Prefer strict parsing that fails fast with a clear error.
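Both surprises are easy to reproduce with JavaScript built-ins: `Boolean("false")` is true because any non-empty string is truthy, and `parseInt("123abc", 10)` is 123 because parsing stops at the first non-digit. A strict alternative for booleans might look like the sketch below, where `parseStrictBoolean` is an assumed helper name.

```typescript
// Accepts real booleans and the exact strings "true" / "false" (useful for query
// params, which are always strings). Everything else is a failure, not a guess.
function parseStrictBoolean(raw: unknown): boolean | null {
  if (typeof raw === "boolean") return raw;
  if (raw === "true") return true;
  if (raw === "false") return false;
  return null; // "yes", "1", 1, "", null, and so on are all rejected
}
```

For JSON bodies you may prefer to accept only real booleans and drop the two string cases entirely.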

Finally, many teams forget limits, so validation passes but your server still suffers. A few basic guards prevent abuse and accidents:

- Cap body size and reject oversized payloads early.

- Set max lengths for strings (names, emails, IDs).

- Limit arrays (items per request) and object nesting depth.

- Require explicit formats (UUIDs, ISO dates) and reject “close enough”.

- Keep defaults intentional; don’t auto-fill missing fields in ways that hide client bugs.

A practical example: an AI-generated endpoint that “updates profile” might accept a 5MB bio, a nested object where a string was expected, and unknown keys that overwrite stored data. This is the kind of cleanup FixMyMess often finds in audits: validation exists, but it is permissive in exactly the wrong places.

Quick checklist for safer API inputs

If you want server-side input validation for APIs that actually blocks bad data, keep a short checklist and run it on every endpoint you touch. Many “it works on my machine” API bugs come from skipping one of these basics.

Start by validating every input surface, not just JSON bodies. Query strings and route params are untrusted too, and they often end up controlling filters, IDs, and pagination.

Use this checklist as your minimum bar:

- Validate body, query, and params before any business logic or database calls.

- Prefer allowlists: reject unknown fields unless you explicitly need flexible payloads.

- Put hard limits everywhere: max string length, numeric ranges, array sizes, and pagination caps.

- Parse into a typed, validated object and only use that parsed output. If parsing fails, fail closed.

- Add a few nasty test cases per endpoint, including wrong types, missing required fields, extra fields, and oversized inputs.

A quick example: your endpoint expects { "email": "...", "age": 18 }. A real request might send age: "18", age: -1, or add "isAdmin": true. If you silently coerce types or accept extra keys, you are training your system to accept nonsense and, worse, privilege changes.

If you inherited AI-generated API code, this checklist is where fixes often start. Teams working with FixMyMess usually find the same pattern: the “happy path” works, but the server trusts whatever shows up. Tight validation plus strict parsing is one of the fastest ways to turn a fragile prototype into something you can safely run in production.

Example: fixing an AI-built endpoint that accepts garbage

A common story: an AI tool generates a /signup endpoint that "works locally" because you only tried the happy path. In production, it starts accepting nonsense, and the damage is quiet.

Here’s a real failure pattern. The endpoint expects:

- email (string)

- password (string)

- plan ("starter" | "pro")

But the API happily accepts this payload:

{ "email": ["[email protected]"], "password": true, "plan": "pro ", "coupon": {"code":"FREE"} }

What happens next is messy: the email ends up as "[email protected]" (stringified array), the boolean password gets coerced, the plan has a trailing space so pricing falls back to a default, and the unexpected coupon object might get stored as "[object Object]" or crash a later job. You see broken auth, wrong billing, and corrupted rows that are hard to clean up.

The fix is not "sanitize everything". It is server-side input validation for APIs with strict parsing.

Walk-through fix

Define a schema that rejects unknown fields, trims and normalizes strings, and enforces types. Then parse before doing any work.

- Validate required fields and types (no coercion by default)

- Normalize safe values (trim plan, lowercase email)

- Reject extras (drop or fail on coupon)

- Apply limits (max lengths, password rules)

When parsing fails, return a clear 400:

"Invalid request: plan must be one of starter, pro; email must be a string; unexpected field coupon"

End result: fewer production incidents, cleaner data, and faster debugging because bad inputs stop at the door. If you inherited an AI-built endpoint like this, FixMyMess typically finds these weak spots during a quick audit and patches them without rewriting the whole app.
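The walk-through fix could look roughly like the following hand-written parser. It is a sketch, not the code from any audited project: `parseSignupRequest`, the simple email pattern, and the 12 to 72 character password rule are assumptions, and this version fails on extra fields rather than dropping them.

```typescript
type Plan = "starter" | "pro";
type SignupRequest = { email: string; password: string; plan: Plan };
type SignupOutcome = { ok: true; data: SignupRequest } | { ok: false; message: string };

const SIGNUP_KEYS = ["email", "password", "plan"];

function parseSignupRequest(raw: unknown): SignupOutcome {
  if (typeof raw !== "object" || raw === null || Array.isArray(raw)) {
    return { ok: false, message: "Invalid request: body must be a JSON object" };
  }
  const body = raw as Record<string, unknown>;
  const rawEmail = body["email"];
  const rawPassword = body["password"];
  const rawPlan = body["plan"];
  const problems: string[] = [];

  // Types first (no coercion), then safe normalization of the string fields.
  const email = typeof rawEmail === "string" ? rawEmail.trim().toLowerCase() : null;
  const password = typeof rawPassword === "string" ? rawPassword : null;
  const plan = typeof rawPlan === "string" ? rawPlan.trim() : null;

  if (email === null) problems.push("email must be a string");
  else if (email.length > 254 || !/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) {
    problems.push("email must be a valid address");
  }
  if (password === null) problems.push("password must be a string");
  else if (password.length < 12 || password.length > 72) {
    problems.push("password must be 12 to 72 characters");
  }
  if (plan !== "starter" && plan !== "pro") problems.push("plan must be one of starter, pro");

  // Reject extras: fail on anything outside the contract.
  for (const key of Object.keys(body)) {
    if (!SIGNUP_KEYS.includes(key)) problems.push("unexpected field " + key);
  }

  if (problems.length > 0 || email === null || password === null || (plan !== "starter" && plan !== "pro")) {
    return { ok: false, message: "Invalid request: " + problems.join("; ") };
  }
  return { ok: true, data: { email, password, plan } };
}
```

Note that because this sketch trims plan before checking it, a value of "pro " with a trailing space is accepted as "pro"; if you would rather reject it, compare the untrimmed value instead.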

Next steps: improve safety without rewriting everything

If you want server-side input validation for APIs to pay off fast, don’t start by “validating everything.” Start where bad inputs cause real damage: endpoints that write data or trigger expensive work.

Pick three endpoints first. A good set is login or signup (auth), anything that touches money (payments), and any admin action. Add validation right at the boundary (request body, query, headers) before you touch your database, files, queues, or third-party APIs. That one move prevents most “accepted nonsense” bugs.

A simple rollout plan that avoids a rewrite:

- Add one schema per endpoint and reject unknown fields by default.

- Set tight limits (string length, array size, numeric ranges, max page size).

- Normalize and parse once (trim, date parsing, number coercion only when intentional).

- Add monitoring for validation failures so you see what clients are sending.

Legacy clients are the tricky part. If you can’t break them overnight, allow temporary leniency in a controlled way: accept the old shape, but convert it into the new strict shape internally, and log every time you had to “rescue” the input. Put an explicit deprecation date on that path so it doesn’t become permanent.
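A controlled leniency path can be a small adapter that runs before strict validation. Everything specific in this sketch is hypothetical: the old field name `qty`, the new field name `quantity`, the endpoint label, the cutoff date, and the function name `upgradeLegacyOrder` are invented to show the pattern.

```typescript
// Hypothetical scenario: old clients send { qty: "3" }, the new contract is { quantity: 3 }.
const LEGACY_CUTOFF = new Date("2026-01-01T00:00:00Z"); // assumed deprecation date

type RescueLog = (event: { endpoint: string; reason: string }) => void;

// Converts the old shape into the new one, logging every rescue.
// The result still goes through strict validation afterwards.
function upgradeLegacyOrder(
  raw: Record<string, unknown>,
  now: Date,
  log: RescueLog
): Record<string, unknown> {
  const isLegacy = "qty" in raw && !("quantity" in raw);
  // After the cutoff the old shape is passed through untouched, so strict
  // validation rejects it as an unknown field.
  if (!isLegacy || now.getTime() >= LEGACY_CUTOFF.getTime()) return raw;

  const { qty, ...rest } = raw;
  log({ endpoint: "POST /orders", reason: "legacy qty field converted" });
  // Convert only clean digit strings; anything else is left for validation to reject.
  const quantity = typeof qty === "string" && /^\d{1,4}$/.test(qty) ? Number(qty) : qty;
  return { ...rest, quantity };
}
```

Passing the current time in as a parameter keeps the cutoff behavior easy to test, and the log callback gives you a count of how many clients still depend on the old shape.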

If your API was generated by an AI tool and the code already feels tangled (copy-pasted handlers, inconsistent types, hidden auth bugs), a focused audit is often faster than patching endpoint-by-endpoint. FixMyMess can do a free code audit and then repair the highest-risk API paths (validation, auth, secrets, and security issues) so you get to “safe by default” without a full rebuild.